AI Art Weekly #24

Hello there my fellow dreamers and welcome to issue #24 of AI Art Weekly! 👋

I opened a Discord server! If you’re looking to chat with like-minded creators about AI art, animation, games, movies or whatever piques your interest, come join us. In there we also brainstorm the weekly challenge themes, give each other feedback on art pieces and share the latest shiny papers and tools that make their way into this newsletter. Don’t be a stranger!

This week’s highlights include:

- GigaGAN: Large-scale GAN for Text-to-Image Synthesis

- Word-As-Image turns letters into semantic visuals (perfect for logo design?)

- Interview with artist and Cannes short film winner Glenn Marshall

- New ControlNet models for style and palette guidance

- ControlNet models for Stable Diffusion v2.1

Cover Challenge 🎨

The challenge for this week’s cover was “fashion”, which received 82 submissions from 44 different artists. The community decided on the final winner:

Congratulations to @trevorforrestm1 for winning the cover art challenge 🎉🎉🎉 and a big thank you to everyone who found the time to contribute!

The next challenge’s theme is “horror”. I don’t think this one needs much explanation, just make sure it’s not too gory. The reward is another $50. The rulebook can be found here and images can be submitted here.

The new Discord is also a great place to ask for and receive feedback on your challenge submissions.

I’m looking forward to all of your artwork 🙏

If you want to support the newsletter, this week’s cover is available on objkt for 5ꜩ a piece. Thank you for your support 🙏😘

Reflection: News & Gems

GigaGAN: Large-scale GAN for Text-to-Image Synthesis

GANs are back with a bang, baby! So far, training GANs on large datasets like LAION hasn’t worked well. GigaGAN aims to solve this with a new architecture that far exceeds the old limits, bringing GANs back as a viable option for text-to-image synthesis. It has three major advantages. First, it is orders of magnitude faster at inference time, taking only 0.13 seconds to synthesize a 512px image. Second, it can synthesize high-resolution images, for example a 16-megapixel image in 3.66 seconds. Finally, GigaGAN supports various latent space editing applications such as latent interpolation, style mixing, and vector arithmetic operations. Super exciting! I’ve always wanted to get into StyleGAN interpolations, and now I’ll finally get to do that. No more excuses, except one: someone needs to open source some code 😅. So fingers crossed 🤞
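GigaGAN’s code isn’t public yet, so this is not its method. It is only a generic sketch of one common way to do latent interpolation: spherical linear interpolation (slerp) between two Gaussian latent vectors. Each intermediate vector would then be fed to a generator, one per animation frame. The helpers `slerp` and `interpolation_path` are hypothetical names used here for illustration.

```python
import math
import random


def slerp(z0, z1, t):
    """Spherical linear interpolation between latent vectors z0 and z1, t in [0, 1]."""
    dot = sum(a * b for a, b in zip(z0, z1))
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    # Clamp to guard against tiny floating-point overshoot before acos.
    cos_omega = max(-1.0, min(1.0, dot / (n0 * n1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:
        # (Anti)parallel vectors: slerp is ill-defined, fall back to linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(z0, z1)]
    s0 = math.sin((1 - t) * omega) / sin_omega
    s1 = math.sin(t * omega) / sin_omega
    return [s0 * a + s1 * b for a, b in zip(z0, z1)]


def interpolation_path(z0, z1, steps):
    """Latents for `steps` evenly spaced frames from z0 to z1, endpoints included."""
    return [slerp(z0, z1, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    random.seed(0)
    # Two random Gaussian latents (512 dimensions is an assumption, not GigaGAN's size).
    z_a = [random.gauss(0, 1) for _ in range(512)]
    z_b = [random.gauss(0, 1) for _ in range(512)]
    frames = interpolation_path(z_a, z_b, steps=8)
    # Each latent in `frames` would be passed to a generator (not shown here).
    print(len(frames), len(frames[0]))
```

Slerp is often preferred over a straight line for Gaussian latents: high-dimensional samples sit close to a sphere, and a straight path between two of them dips through lower-norm points the generator rarely saw during training.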

Disentangled GigaGAN prompt interpolation example

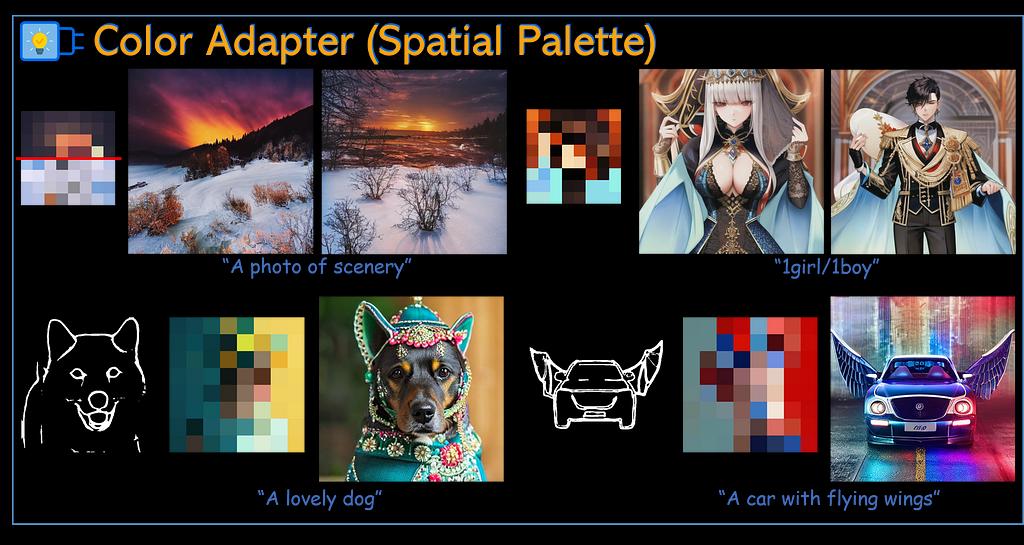

T2I-Adapter updates

Remember last week when I fanboyed about Composer being able to let you control the color palette of images? Well, it seems that right after that issue went out, TencentARC published two new T2I-Adapters. The Style Adapter lets you use a reference image, and the Color Adapter a spatial palette (basically a 2D map of colors), for image generation guidance. If you want to try them out, there is a HuggingFace demo. You can also download the models and use them with the Automatic1111 ControlNet plugin. Canny, depth and pose models for Stable Diffusion v2.1 have also become available recently. Check out the resources section below.
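To make “spatial palette” a bit more concrete: as far as I understand it, the Color Adapter is guided by a heavily downsampled copy of an image that is blown back up to full size, so each region becomes a flat block of color. The sketch below does that with plain block averaging. The function name `spatial_palette` and the 64-pixel block size are assumptions, and the official preprocessing may resize differently (for example with bicubic downsampling).

```python
def spatial_palette(img, block=64):
    """Turn an RGB image into a blocky color map.

    img: list of rows, each row a list of (r, g, b) tuples with values 0-255.
    block: side length in pixels of each color cell (64 is an assumption).
    """
    h, w = len(img), len(img[0])
    out = [[None] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            # Cells on the right/bottom edge may be smaller than `block`.
            ys = range(by, min(by + block, h))
            xs = range(bx, min(bx + block, w))
            n = len(ys) * len(xs)
            # Average each channel over every pixel in the cell.
            avg = tuple(
                round(sum(img[y][x][c] for y in ys for x in xs) / n)
                for c in range(3)
            )
            # Fill the whole cell with that one color (nearest-neighbour upsampling).
            for y in ys:
                for x in xs:
                    out[y][x] = avg
    return out


if __name__ == "__main__":
    # Tiny 4x4 example with 2x2 cells: left half red, right half blue.
    red, blue = (255, 0, 0), (0, 0, 255)
    image = [[red, red, blue, blue] for _ in range(4)]
    palette = spatial_palette(image, block=2)
    print(palette[0][0], palette[0][3])
```

A larger block gives coarser, more abstract palettes and leaves the model more freedom; a smaller one pins colors to specific regions.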

T2I Color Adapter (Spatial Palette)

Word-As-Image for Semantic Typography

I sometimes design logos (either for clients or friends), and Word-As-Image seems like a great way to find inspiration for an icon that goes well with a specific font. The model can transform the letters of a written word to semantically illustrate its meaning. For example, it can transform the shape of the letter “C” in the word “cat” into, well, a cat. To take this even further, one could take that shape and stylize it with Stable Diffusion using ControlNet or the SDv2 depth2img model, as illustrated on the project page.

Word-As-Image examples

Video-P2P: Video Editing with Cross-attention Control

We’ve already seen a few video editing models in the past few months, and Video-P2P is the newest addition to this category. The quality may not be ready for video production yet, but it’s always interesting to see specific tasks get better over time.

Video-P2P example: A Spider-Man ~~man~~ is driving a motorbike in the forest

X-Avatar: Expressive Human Avatars

Who wants to be a 3D VTuber? X-Avatar is a novel avatar model that captures the full expressiveness of digital humans to bring about life-like experiences in telepresence and AR/VR. The method models bodies, hands, facial expressions and appearance in a holistic fashion and can be learned from either full 3D scans or RGB-D data. If VTubing isn’t what you’re looking for (I wouldn’t blame you), X-Avatar might also be a good tool for just creating animations for movies or shorts.

X-Avatar examples

SadTalker

Last year, ANGIE was one of my favourite papers, presenting the ability to generate videos of talking and gesturing people from only an input image and an audio file. Unfortunately, to this day, the code remains behind closed doors. But there is a promising alternative called SadTalker. Although it focuses only on animating faces, without the gesturing part, the results look smooth and hold up across a wide array of styles.

SadTalker examples

@WonderDynamics introduced their product Wonder Studio – an AI tool that automatically animates, lights and composites CG characters into a live-action scene. You can sign up for the closed beta on their website. Just imagine combining this with a high-quality 3D avatar generator and boom, infinite possibilities.

@alejandroaules published his book about Aztec lore produced using his own fine-tuned Stable Diffusion models (after having been temporarily banned from Midjourney for generating some graphic imagery). The full digital book can be bought on Gumroad.

@_bsprouts made a cool short animation using Gen1. I got to play around with it myself this week, and the current 3-second limit is a bit of a pain, which makes me appreciate @_bsprouts’ effort to push through it and show us what’s possible.

Imagination: Interview & Inspiration

In today’s issue of AI Art Weekly, we talk to AI artist Glenn Marshall. Glenn is a two-time Prix Ars Electronica award winner and a Cannes short film winner. While I do enjoy still image art, there is something about animation that gets my heart pumping, and Glenn definitely delivers on that front, so let’s jump in.

[AI Art Weekly] Glenn, what’s your background and how did you get into AI art?

I first started working with 3D animation about 25 years ago. Around 15 years ago, I shifted my focus entirely to generative art using code. This field was much more exciting and innovative to me. I became fascinated with the abstract and metaphysical aspects of art and how to convey philosophical ideas using numbers and algorithms.

Soon after, the development of text-to-image synthesis with AI began, and I was an early adopter of “Big Sleep,” an open-source Google Colab project created by Ryan Murdock. Although rudimentary, it was revolutionary. I could articulate my imagination and watch as it was visualized by a machine, which sparked new creative areas of my brain.

“Fight!” by Glenn Marshall

[AI Art Weekly] Do you have a specific project you’re currently working on? What is it?

Yes, it’s quite exciting, actually. I currently have short and feature films in development, and I’m using AI and Virtual Production (The Volume). I will be shooting in a few weeks and using AI for production design, as well as stylizing and rotoscoping live action elements. Unfortunately, I can’t provide many more details at the moment!

[AI Art Weekly] What drives you to create?

This new technology allows for the creation of things that have never been seen before and enables storytelling in ways that were previously impossible.

“Standing forever” by Glenn Marshall

[AI Art Weekly] What does your workflow look like?

Browsing YouTube for videos that I could take and transform. Creating videos and animations has always been my main thing with AI. With Automatic1111, fine-tuned Stable Diffusion models, ControlNet and other tools, the creative possibilities are getting crazier by the day, and we’ve definitely reached the stage where AI is a serious creative tool for mainstream TV, film and animation productions.

[AI Art Weekly] What is your favourite prompt when creating art?

I have never been a prompt wizard. Instead, I rely on transforming videos and stills into something unique using simple styling prompts. For instance, I have enjoyed applying Giuseppe Arcimboldo and art nouveau styles to some mindfulness coloring pages, using ControlNet to work on a piece by Escher, or simply applying the Pre-Raphaelite painting style to a Robert Palmer music video.

“The end of the world is nigh.” by Glenn Marshall

[AI Art Weekly] How do you imagine AI (art) will be impacting society in the near future?

To say that this will cause disruption would be an understatement. Ironically, I believe human artists will become more valued. We’ve just gone through the phase of people saying “wow, can you believe a computer made this amazing art?”. But now, it’s so easy for anyone to create amazing AI art, that one day soon we might see a “real” painting and say “wow, can you believe a human made this amazing art?”.

[AI Art Weekly] Who is your favourite artist?

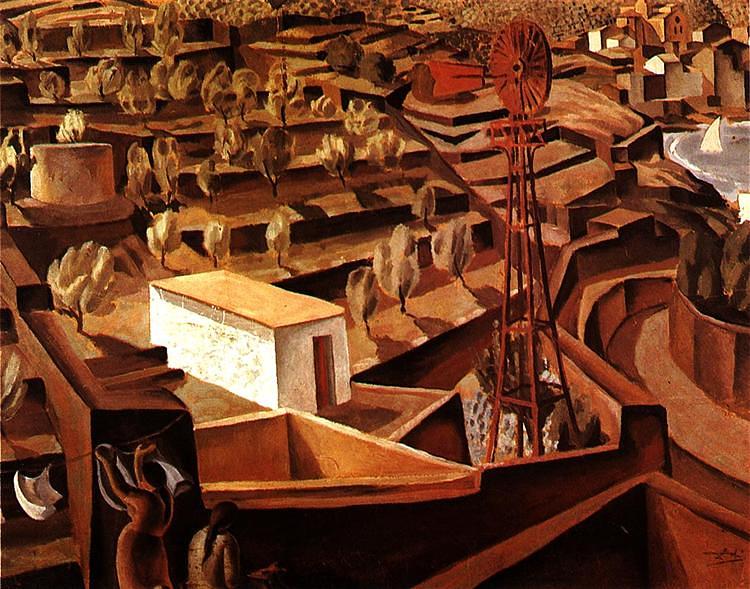

The Surrealists, namely Dali, Magritte, and Escher, are the obvious ones. I believe they would have been blown away by AI.

“Landscape of Cadaques” by Salvador Dali

[AI Art Weekly] Anything else you would like to share?

Thierry Frémaux, director of the Cannes Film Festival, wrote to congratulate me on the success of my AI film, ‘The Crow.’ He also told me that his friend Mylène Farmer, France’s top female pop star, was interested in using it during her concerts this summer. So I gave it to her to use!

Creation: Tools & Tutorials

These are some of the most interesting resources I’ve come across this week.

Until now, ControlNet only worked with Stable Diffusion 1.5, because the models have to be trained specifically against the same base model. There are now canny, depth and pose models that work with SDv2.

Posex, a new pose plugin for Automatic1111, lets you stage poses with multiple bodies in 3D directly within the web interface.

One thing Posex can’t do, though, is stage hands correctly. This is where the depth map library comes in. In it, you’ll find a bunch of pre-rendered depth maps of hands, which you can combine with the pose images from Posex to generate the hands you actually want.

If you’re looking for a free-to-use video editor, CapCut seems like a good choice. @superturboyeah used it to create a short music video from stills made with Midjourney.

@weird_momma_x shared some resources with me that she uses for blending in her work. @smithsonian Open Access is a platform where you can download, share, and reuse millions of the Smithsonian’s images right now, without asking. Perfect if you have concerns about the ethics of using input images when working with AI. Momma also shared a few more resources in the Discord.

A blend of some old work by @moelucio and me, created by @moelucio

And that, my fellow dreamers, concludes yet another issue of AI Art Weekly. Please consider supporting this newsletter by:

- Sharing it 🙏❤️

- Following me on Twitter: @dreamingtulpa

- Leaving a review on Product Hunt

- Using one of our affiliate links at https://aiartweekly.com/support

Reply to this email if you have any feedback or ideas for this newsletter.

Thanks for reading and talk to you next week!

– dreamingtulpa